The following is a copy of what I published in a question on meta.discourse.org about “Enable a CDN for your Discourse” while working on discuss.webplatform.org.

Setup detail

Our setup uses Fastly and leverages their SSL feature. Note that if you want to use SSL too, you’ll have to contact them to have it enabled on your account.

In summary:

- SSL between users and Fastly

- SSL between Fastly and “frontend” servers. (Those are the IPs we put into the Fastly hosts configuration; they are also referred to as “origins” or “backends” in CDN-speak)

- Docker Discourse instance (“upstream”) which listens only on a private network address and port (e.g. 10.10.10.3:8000)

- More than two publicly exposed web servers (“frontend”), with SSL, that we use as “backends” in Fastly

- Each frontend server runs NGINX with an upstream block that proxies to the internal upstream web servers the Discourse Docker instances provide.

- We use NGINX’s keepalive directive (with HTTP/1.1 and a cleared Connection header) in the frontend to minimize connections to the upstreams

Using this method, if we need to scale, we only need to add more internal Discourse Docker instances and the matching NGINX upstream entries.

Note that I recommend using direct private IP addresses instead of internal names. It removes complexity and the need to rewrite Host: HTTP headers.

Steps

Everything is the same as a basic Fastly configuration; refer to “setup your domain”.

Here are the differences:

-

Setup your domain name with the CNAME Fastly will provide you (you will have to contact them for your account though), ours is like that ;

discuss.webplatform.org. IN CNAME webplatform.map.fastly.net. -

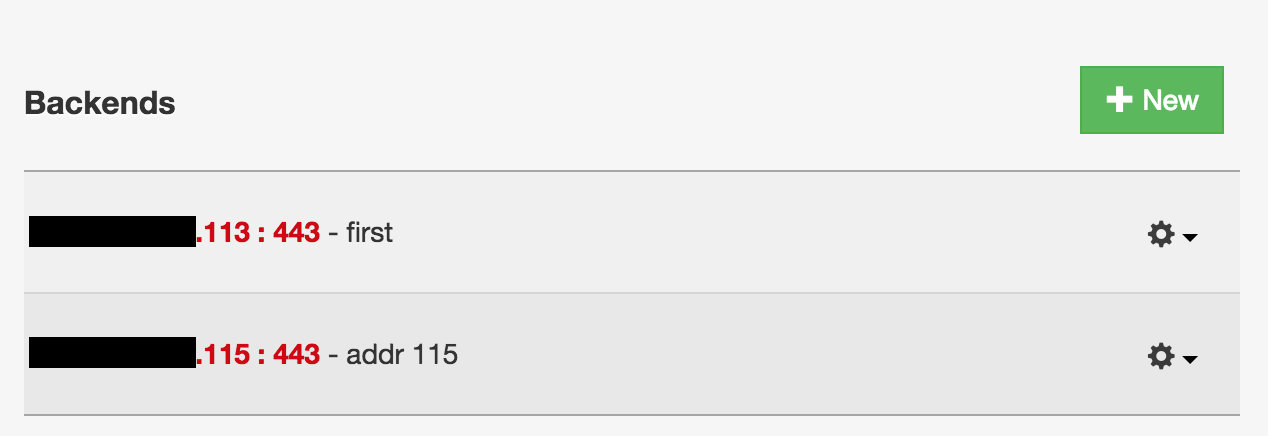

In Fastly pannel at

Configure -> Hosts, we tell which publicly available frontends IPsNotice we use port

443, so SSL is between Fastly and our frontends. Also, you can setup Shielding (which is how you activate the CDN behavior within Fastly) by enabling it on only one. I typically set it on the one I call “first”.

-

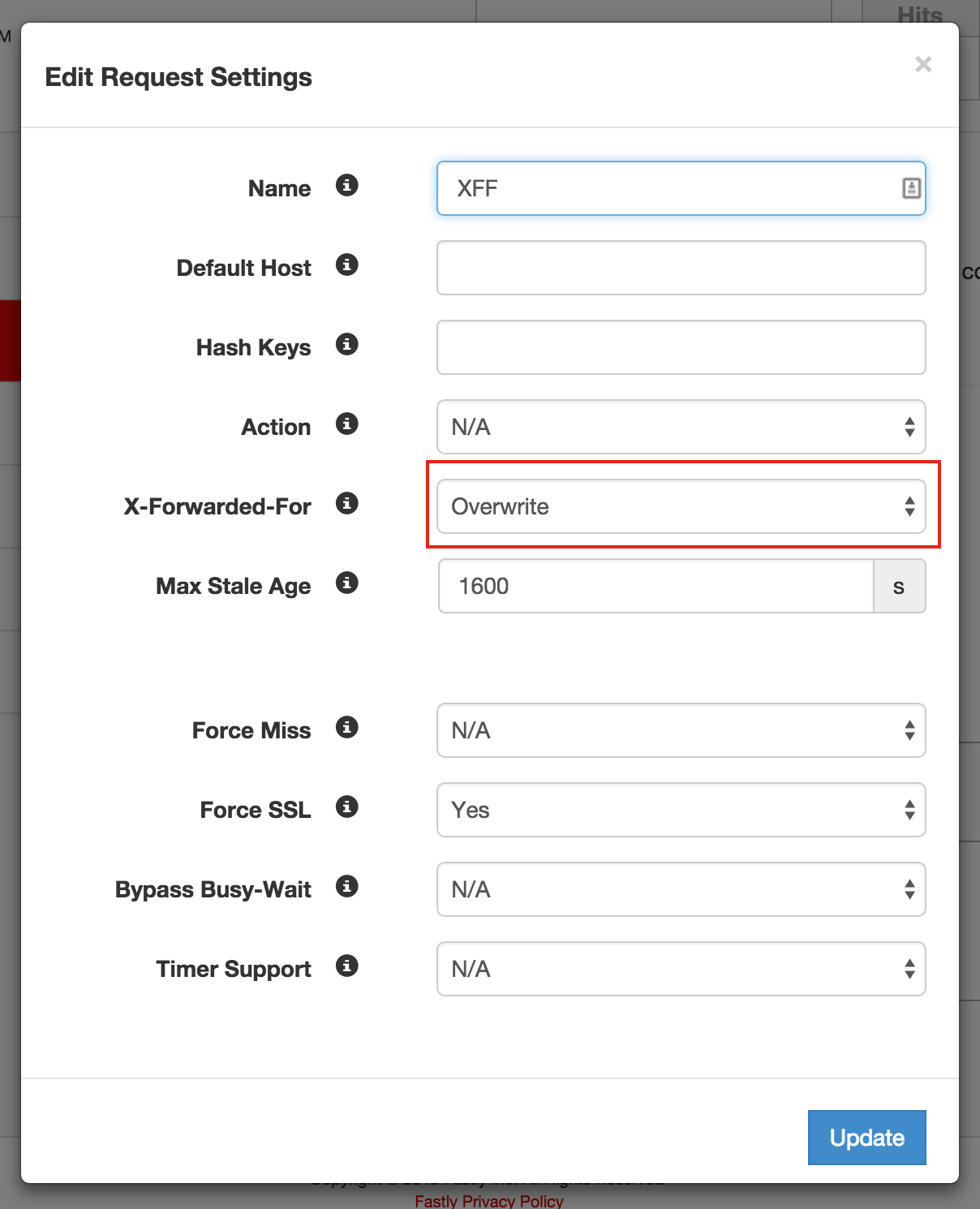

In Fastly pannel

Configure -> Settings -> Request Settings;we make sure we forward. You DONT need this; you can remove it.X-Forwarded-Forheader

- The frontend NGINX server has a block similar to the one shown below.

  In our case, we use Salt Stack as the configuration management system; it generates the virtual hosts for us using the Salt reactor system. Every time a Docker instance becomes available, the configuration is rewritten using this template.

- {{ upstream_port }} would be 8000 in this example

- {{ upstreams }} would be an array of current internal Docker instances, e.g. ['10.10.10.3','10.10.10.4']

- {{ tld }} would be webplatform.org in production, but can be anything else we need in other deployments; it gives great flexibility.

- Notice the use of discoursepolling alongside the discourse subdomain name. Refer to this post about Make Discourse “long polling” work behind Fastly to understand its purpose.

    upstream upstream_discourse {
    {%- for b in upstreams %}
        server {{ b }}:{{ upstream_port }};
    {%- endfor %}
        keepalive 16;
    }

    server {
        listen 443 ssl;
        server_name discoursepolling.{{ tld }} discourse.{{ tld }};
        root /var/www/html;
        include common_params;
        include ssl_params;

        ssl on;
        ssl_certificate /etc/ssl/2015/discuss.pem;
        ssl_certificate_key /etc/ssl/2015/201503.key;

        # Use internal Docker runner instance exposed port
        location / {
            proxy_pass http://upstream_discourse;
            include proxy_params;
            proxy_intercept_errors on;

            # Backend keepalive
            # ref: http://nginx.org/en/docs/http/ngx_http_upstream_module.html#keepalive
            proxy_http_version 1.1;
            proxy_set_header Connection "";
        }
    }
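To make the template variables concrete, here is a sketch of what the rendered output could look like with the example values mentioned above (upstream_port set to 8000, upstreams set to ['10.10.10.3','10.10.10.4'], and tld set to webplatform.org). Exact whitespace depends on how the template engine trims lines, and only the parts that change are shown.

```nginx
# Rendered sketch, assuming:
#   upstream_port = 8000
#   upstreams     = ['10.10.10.3', '10.10.10.4']
#   tld           = webplatform.org
upstream upstream_discourse {
    server 10.10.10.3:8000;
    server 10.10.10.4:8000;
    keepalive 16;
}

server {
    listen 443 ssl;
    server_name discoursepolling.webplatform.org discourse.webplatform.org;
    # ... the remaining directives are identical to the template
}
```

Adding a third Docker instance would only add one more server line to the upstream block; nothing in the server block changes.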

Note that I removed the include proxy_params; line. If you have lines similar to proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;, you don’t need them (!)
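For readers wondering what such an include usually pulls in: the sketch below shows what the stock proxy_params file shipped with the Debian and Ubuntu NGINX packages typically looks like. This is an assumption about your system, not something taken from our servers, so check your own file before removing anything. The X-Forwarded-For line is the kind of line the note above refers to.

```nginx
# Typical contents of /etc/nginx/proxy_params on Debian/Ubuntu (assumption:
# your distribution or configuration management may ship a different file).
proxy_set_header Host $http_host;
proxy_set_header X-Real-IP $remote_addr;
# The line below is the one the note above says you do not need in this setup:
proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
proxy_set_header X-Forwarded-Proto $scheme;
```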

Comments

Peter

nice tutorial! just in case you want to check this with other CDNs: http://cdncomparison.com

Renoir Boulanger

Thanks for this site, it’s great! I’ve been through the learning curve with Varnish and VCL. It’s my opinion that they got it right, and that it feels easier to maintain if your upstream server serves the right headers and the provider (Fastly) acts as a CDN.

I’ll try others. But I’m very satisfied with Fastly!